tldr: if speech recognition is broken in the Flatpak version of Kdenlive, open a bash prompt inside its Flatpak runtime and install the needed Python modules. This article explains how and why.

Kdenlive is a fine editor: as of this writing, I’ve used it for every Veronica Explains episode on my YouTube and PeerTube channels. The editor has a useful speech recognition tool, which, in my case, relies on the openai-whisper Python package.

For my scripted videos, I’ve relied on YouTube to sync my script, punctuation and all, against the final video. But for unscripted videos, I’ve recently used openai-whisper, and it does an excellent job of generating useful captions that I can edit right in Kdenlive, saving me time when uploading my final work.

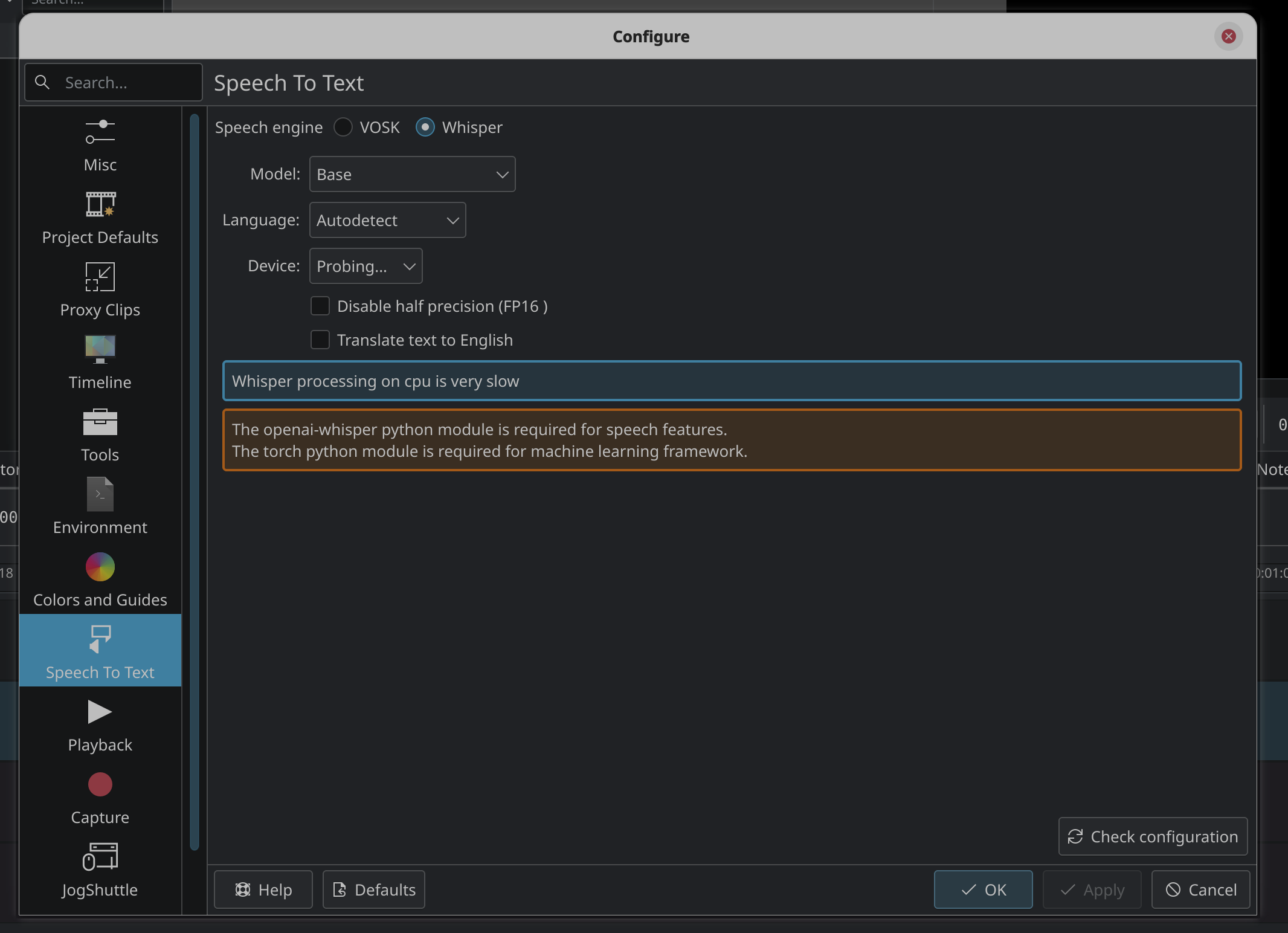

You can find the “speech recognition” features in the Kdenlive menu under Project > Subtitles > Speech Recognition. If it’s not set up, it should offer a “configure” button that installs the needed dependencies and sets things up automatically.

You have the choice between VOSK and Whisper, two Python modules with offline speech recognition engines. In my experience, Whisper does a much better job with English punctuation and accuracy, so that’s what I’m focusing on here. My guess is that VOSK would be a similar installation, though, and it might be better for some languages or circumstances.

If Kdenlive is set up correctly, you should be able to simply pick a model, a language, and the specific region to subtitle, and then hit Process to get started. After a bit, you should have subtitles in a new track in your timeline. From here, you can edit them like any other track and export them in SRT format, which you can then upload to PeerTube and/or YouTube.

That is, if Kdenlive is set up correctly.

In my experience, the native versions of Kdenlive have few problems with speech recognition: as long as you have Python and pip installed and available, Kdenlive just kind of figures it out. For you Windows or Mac users, my guess is you have some PATH or system security issues to address, and I wish you well on your quest. But mine is a Linux journey, and Flatpak is my vessel.

Flatpak – my favorite way to Kdenlive, warts and all

The Flatpak version of Kdenlive is my preferred editor: I get the same version of the software, with all of the same plugins and defaults, regardless of my distro. I recently switched from Debian Sid to Debian Stable on my editing rig, and as of this writing, the apt version of Kdenlive in Stable is two major versions behind the one in Sid. This would be a nightmare without Flathub and Flatpak.

Luckily, because I use the Flathub version of Kdenlive, my projects loaded just fine, including all of my effects (in my experience, the packaged effects can differ from repo to repo). That stability-regardless-of-distro is exactly the reason I love Flatpak and Flathub as a way to make Linux more discoverable and usable for everyday people. My appreciation for Flatpak has even made r/linuxmemes.

What didn’t work was openai-whisper… and I forgot that I needed to bop into the bash prompt for Kdenlive’s Flatpak runtime to install the modules.

I wish Kdenlive could warn you about that, instead of just showing that the modules aren’t installed. Someday I’ll learn how to edit the Kdenlive docs and then I’ll hopefully add something valuable there. Maybe I’ll even pick up C++ and build it myself. Who knows?

Anyway, here’s how I fixed it so speech recognition in Kdenlive worked as expected. I used this process on Debian Bookworm, but I also tested it on (the beta of) Fedora Silverblue 40.

In the terminal, drop into the bash prompt for Kdenlive’s Flatpak.

This is done with:

```shell
flatpak run --command=/bin/bash org.kde.kdenlive
```

To break that down: flatpak run invokes the flatpak program to run an application. You can use that to run your Flatpak programs directly from the terminal (useful when running a window manager or building a startup script).

The program we’re running is org.kde.kdenlive, which is the application ID for the Kdenlive program.

Note: you can look up application IDs with flatpak list.
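If you’d rather not scroll through the whole list, here’s a small sketch that filters it down. It assumes the `--app` and `--columns=application` options of `flatpak list`, which recent Flatpak releases support. The `find_flatpak_id` name is just something I made up for this example.

```shell
# Hypothetical helper: print installed Flatpak application IDs matching a search term.
# `flatpak list --app --columns=application` lists one application ID per line;
# grep -i makes the match case-insensitive.
find_flatpak_id() {
  flatpak list --app --columns=application | grep -i -- "$1"
}
```

Running `find_flatpak_id kdenlive` should print org.kde.kdenlive if you installed it from Flathub.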

In between flatpak run and org.kde.kdenlive, we have --command=/bin/bash. This tells Flatpak to run bash, instead of Kdenlive itself, inside the Kdenlive Flatpak runtime: the sandboxed environment available to the Flatpak version of the Kdenlive application.

This trick is quite useful when working with Flatpak programs: I use it periodically to tweak stuff behind the scenes.

Assuming it works, you should be greeted with a bash prompt. It won’t show you that you’re inside the Flatpak’s bash environment, so it might look like nothing happened. It would be nice if Flatpak gave you some kind of indicator, similar to how activating a Python venv adds its name to your prompt, but oh well.

One way that’s worked for me is to echo out $FLATPAK_ID. If echo $FLATPAK_ID comes back with org.kde.kdenlive, then you know you’re in the runtime for the Flatpak. Another trick that works for me (as a Debian user, at least) is ls /. If this shows an “app” folder, I know I didn’t put that there; it comes from the Flatpak.
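If you find yourself checking this a lot, the echo trick can be rolled into a small function. This is a sketch, and the `in_kdenlive_flatpak` name is hypothetical. Instead of looking for the “app” folder, the fallback check looks for /.flatpak-info, a file Flatpak places at the root of every sandbox.

```shell
# Hypothetical helper: report whether this shell is inside Kdenlive's Flatpak sandbox.
# $FLATPAK_ID is set by Flatpak inside the sandbox; /.flatpak-info is a file
# Flatpak places at the root of every sandbox (used here instead of /app).
in_kdenlive_flatpak() {
  if [ "${FLATPAK_ID:-}" = "org.kde.kdenlive" ]; then
    echo "inside the org.kde.kdenlive runtime"
  elif [ -f /.flatpak-info ]; then
    echo "inside a Flatpak sandbox, but not Kdenlive's"
  else
    echo "not inside a Flatpak"
  fi
}
```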

I think some Flatpak setups might force a custom $PS1 (that little terminal prompt that says who you are and what folder you’re in) when you’re operating inside the runtime. I tried it on a Silverblue VM, and the bash prompt made it much clearer where I was.
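If your prompt doesn’t change, you can label it yourself. Here’s a minimal sketch: the `mark_flatpak_prompt` name and the “(flatpak:…)” label are my own invention. It only touches $PS1 when $FLATPAK_ID is set, so it does nothing outside a Flatpak.

```shell
# Hypothetical helper: prefix the prompt with the Flatpak app ID so it's obvious
# you're inside the sandbox. Does nothing outside a Flatpak ($FLATPAK_ID unset).
mark_flatpak_prompt() {
  [ -n "${FLATPAK_ID:-}" ] || return 0
  case "${PS1:-}" in
    "(flatpak:"*) ;;  # already marked; don't stack prefixes
    *) PS1="(flatpak:${FLATPAK_ID}) ${PS1:-}" ;;
  esac
}
```

Paste the function into the sandboxed bash prompt and run mark_flatpak_prompt. The label lasts until you exit that shell.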

Next, ensure Python’s “pip” is installed in the Flatpak’s runtime.

Once you’re in the runtime, this one is a pretty simple one-liner:

```shell
python -m ensurepip
```

This runs Python’s built-in ensurepip module, which installs pip into the runtime’s Python if it isn’t already there, so the next command has what it needs.
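To double-check that step before the big install, you can ask pip for its version. This is a sketch, and the `check_pip` name is hypothetical. It relies on `python -m pip --version` exiting with an error when the pip module is missing.

```shell
# Hypothetical helper: confirm the sandbox's Python can find pip.
# `python -m pip --version` exits non-zero if the pip module is missing.
check_pip() {
  if python -m pip --version >/dev/null 2>&1; then
    echo "pip is available"
  else
    echo "pip is missing; run: python -m ensurepip" >&2
    return 1
  fi
}
```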

Last, install the packages Kdenlive needs.

I do this in a one-liner:

```shell
python -m pip install -U openai-whisper torch
```

Here’s what you’re doing: you’re using Python’s pip module to install the modules Kdenlive warned us about, openai-whisper and torch. The -U flag is short for --upgrade: if either package is already installed, pip upgrades it to the newest available version. Either way, pip installs the dependencies those packages need.
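Once the install finishes, a quick import test tells you whether Python inside the runtime can actually see both modules. This is a sketch with a hypothetical function name. Note that the pip package is called openai-whisper, but the Python module it provides is imported as whisper.

```shell
# Hypothetical helper: check that the modules Kdenlive's Whisper option needs
# can be imported. The "openai-whisper" pip package provides the "whisper" module.
check_whisper_modules() {
  local mod missing=0
  for mod in whisper torch; do
    if python -c "import ${mod}" >/dev/null 2>&1; then
      echo "ok: ${mod}"
    else
      echo "missing: ${mod}"
      missing=1
    fi
  done
  return "$missing"
}
```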

This command could take a long while: it has to download a lot of packages, and torch alone is huge. Get a coffee and relax. Watch a PeerTube video about ripping video games. Better yet, call your mom; she misses you.

That’s it: Kdenlive should handle speech recognition now.

When everything’s complete, use the exit command to hop out of the Flatpak’s runtime, and then open up Kdenlive your usual way.

If all works as expected, you should now be able to run the speech recognition tool in Project > Subtitles > Speech Recognition. My suggestion is to test it on a small segment of audio first. Don’t start with a 3-hour video, because generating subtitles takes time. Once you see that it’s working, try it on the larger project, and enjoy the efficiency!

I think I first learned of this trick from a Reddit post a while ago as I was trying out openai-whisper for the first time, so thanks to that person for posting about this.

Now, one thing to keep in mind: your Python setup inside the Flatpak might change as Kdenlive’s devs push updates, and the modules you installed could stop working. So you might need to run these commands again after updating Kdenlive down the road, at least until the Kdenlive devs add a feature to handle this from the GUI for Flathub installs too.
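If you do end up redoing this after an update, you can skip the interactive prompt entirely. Flatpak passes any arguments after the application ID to the program named in --command, so bash can receive a -c string containing both commands. This is a sketch, and the function name is hypothetical. Re-running ensurepip when pip is already present should be harmless.

```shell
# Hypothetical helper: redo the whole fix in one shot after a Kdenlive update.
# Arguments after the app ID go to the --command program, so bash gets `-c '...'`.
refresh_kdenlive_whisper() {
  flatpak run --command=/bin/bash org.kde.kdenlive -c \
    'python -m ensurepip && python -m pip install -U openai-whisper torch'
}
```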

Lastly, don’t be a jerk: add your damn captions.

For real, folks. Good subtitles and captions help everyone. For one, there’s the fact that folks who are deaf or hard-of-hearing deserve to be able to enjoy an accurate transcription of your video… Helping them out is just being a good person, and should be enough of a reason to care about your captions, full stop.

But since I know some folks aren’t moved by that argument, here’s another selfish one: good subtitles also benefit you directly as a creator.

Lots of folks watch videos on a mobile device with the sound off. If you have great subtitles, with proper punctuation, spelling, and pacing, your video is going to be much easier to digest. That means folks will watch it longer, and share it more readily. If your video can be enjoyed in a crowded room or at a workplace, guess what? More folks will watch your videos. More folks will subscribe to you. It’s not rocket science.

Plus, good subtitles with proper spelling probably help YouTube/Vimeo/AOL/whatever surface your video in search. I can’t prove that yet, but the logic holds up to me: accurate words in the transcript should translate to a higher ranking in search. With the rise of LLM-enhanced search “features,” I’m guessing this will only continue to help your videos reach a wider audience. You’re speaking naturally, so your captions should reflect natural speech.

And speaking of a wider audience, your videos will perform better with folks who speak languages other than yours if you give them proper subtitles, instead of the terribly (un)punctuated mess of words that YouTube spits out by default. I hear this a lot from folks who speak English as a second or third language: they tell me my subtitles are a critical part of why they watch my stuff.

So yeah, don’t be a jerk: caption your work. Kdenlive can help with that.

Thanks for reading!

The written version of Veronica Explains is made possible by my Patrons and Ko-Fi members. This website has no ad revenue, and is powered by everyday readers like you. Sustaining membership starts at USD $2/month, and includes perks like a weekly member-only newsletter. Thank you for your support!